Scalable APIs with Microservices

When building APIs, the question that comes up most often during the design phase is: when a lot of traffic hits the APIs, will my application scale? One of the first solutions that comes to mind is to turn on autoscaling and use more server resources to keep up with the increased demand.

Monolithic applications are difficult to profile for performance and to benchmark for scale, which makes them hard to scale with confidence. Microservices are a good alternative: smaller units that each perform a specific function and can be scaled independently when needed.

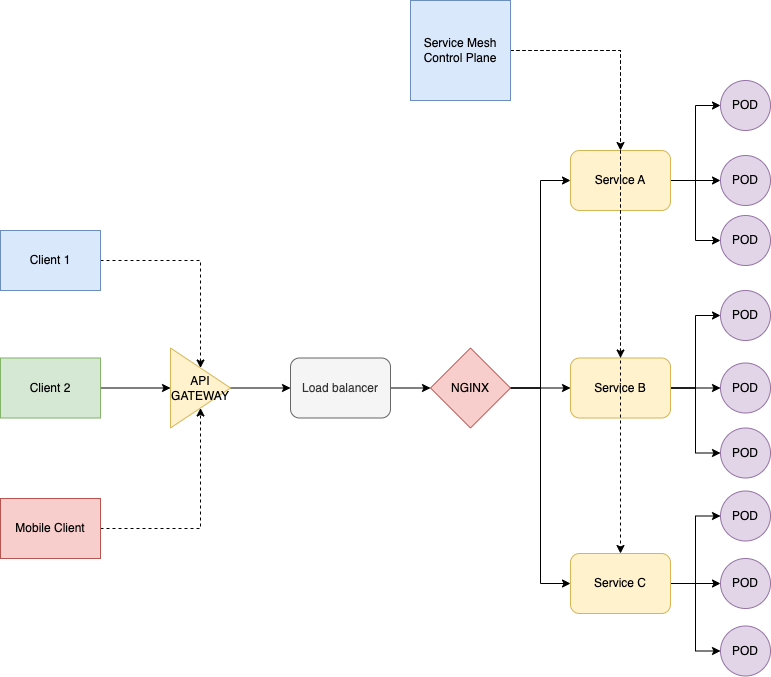

API Gateways

While distributing functionality across multiple microservices can be a good idea for managing scale and cost, it becomes difficult for the frontend to connect to all the services it needs in order to operate coherently. This problem is solved by placing an API gateway in front of the microservices. The API gateway serves several functions: it unifies the data served by the microservices for easier frontend consumption, provides a single facade for managing authentication and authorisation, manages the security of clients connecting to the backend, acts as a proxy layer that hides the actual endpoints, and enables throttling for specific endpoints or clients when needed.
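
Two of the gateway functions described above, proxying to hidden endpoints and per-client throttling, can be sketched in a few lines. This is a minimal illustration rather than a production gateway: the `Gateway` and `TokenBucket` classes, the route table, and the `orders.internal` hostname are all hypothetical, and a real deployment would normally rely on an existing gateway product.

```python
import time


class TokenBucket:
    """Allows bursts of `capacity` requests, refilled at a steady rate."""

    def __init__(self, capacity, refill_per_second, clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self):
        now = self.clock()
        # Refill in proportion to the elapsed time, capped at capacity.
        elapsed = now - self.updated
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class Gateway:
    """Maps public path prefixes to internal upstreams and throttles per client."""

    def __init__(self, routes, capacity=5, refill_per_second=1.0, clock=time.monotonic):
        # Longest prefix first, so "/orders/returns" wins over "/orders".
        self.routes = sorted(routes.items(), key=lambda item: len(item[0]), reverse=True)
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock
        self.buckets = {}

    def resolve(self, client_id, path):
        """Return (status, upstream_url); upstream_url is None unless status is 200."""
        bucket = self.buckets.get(client_id)
        if bucket is None:
            bucket = TokenBucket(self.capacity, self.refill_per_second, self.clock)
            self.buckets[client_id] = bucket
        if not bucket.allow():
            return 429, None  # Too Many Requests
        for prefix, upstream in self.routes:
            if path == prefix or path.startswith(prefix + "/"):
                # The client only ever sees the public prefix, never the upstream host.
                return 200, upstream + path[len(prefix):]
        return 404, None
```

Keeping one bucket per client means a single noisy client is throttled without affecting the others; the same idea extends to one bucket per endpoint.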

Kubernetes for Microservices

The microservices can be deployed on a Kubernetes cluster to handle very large scale. Containerised microservices can be deployed to any cloud provider and set up behind load balancers to allow scaling. Each microservice can use its own data store or consume a managed RDBMS or analytics engine service. The services can also connect to third-party services to consume any data they need. If the services are not behind an API gateway, an NGINX proxy can be set up as a consolidation point instead.
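
To give a feel for how a cluster decides to scale a service, the sketch below follows the replica calculation documented for the Kubernetes Horizontal Pod Autoscaler: the desired replica count is the current count multiplied by the ratio of the observed metric to the target metric, rounded up. The function name, the default bounds, and the example numbers are assumptions made for illustration; the 0.1 tolerance mirrors the documented default.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Estimate how many replicas a service needs for the observed load."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current size to avoid flapping.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Never go outside the configured bounds.
    return max(min_replicas, min(max_replicas, desired))
```

For example, four replicas averaging 200 requests per second each against a target of 100 would be scaled to eight, while the same four replicas at 50 requests per second would be scaled down to two.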

API Security

While external security can be managed through network firewalls or web application firewalls, the internal security of the containers in a cluster also needs to be worked into the solution design to ensure a watertight security setup. A service mesh such as Istio can be configured to secure the traffic between containers inside the cluster.
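
The idea behind internal security can be illustrated with a default-deny rule set: a call between two services is rejected unless a rule explicitly allows it. The sketch below is conceptual only and is not Istio's API; a mesh expresses such rules in its own declarative configuration. The `Rule` and `MeshPolicy` names and the service names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    source: str             # calling service
    destination: str        # service being called
    methods: frozenset      # HTTP methods the caller may use


class MeshPolicy:
    """Default deny: a call is allowed only if some rule matches it."""

    def __init__(self, rules):
        self.rules = list(rules)

    def is_allowed(self, source, destination, method):
        return any(
            rule.source == source
            and rule.destination == destination
            and method in rule.methods
            for rule in self.rules
        )


policy = MeshPolicy([
    Rule("frontend", "orders", frozenset({"GET", "POST"})),
    Rule("orders", "payments", frozenset({"POST"})),
])
```

With this policy, `frontend` can read and create orders, but it cannot call `payments` directly, so a compromised frontend container does not automatically gain access to every service in the cluster.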

Monitoring and Management

To manage and monitor the APIs and to respond quickly to failure events, a network and application monitoring tool of choice should be used. To log all events occurring across the service mesh, a logging engine and analyser should be added. A dashboard for monitoring and alerting on any negative events can help DevSecOps run smoothly.
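
As a rough sketch of what such monitoring does underneath, the snippet below emits one structured log line per request and raises an alert when the share of server errors in a sliding window exceeds a threshold. The `ErrorRateMonitor` class, the window size, and the threshold are illustrative assumptions; a real setup would delegate this to the chosen monitoring and logging tools.

```python
import json
from collections import deque


class ErrorRateMonitor:
    """Tracks the share of 5xx responses over the most recent requests."""

    def __init__(self, window=100, threshold=0.05):
        # deque(maxlen=...) silently drops the oldest entry once the window is full.
        self.results = deque(maxlen=window)
        self.threshold = threshold

    def record(self, service, status_code):
        """Store the outcome and return a structured log line for the log engine."""
        self.results.append(status_code >= 500)
        return json.dumps({"service": service, "status": status_code})

    def error_rate(self):
        if not self.results:
            return 0.0
        return sum(self.results) / len(self.results)

    def should_alert(self):
        return self.error_rate() > self.threshold
```

A sliding window keeps the alert focused on recent behaviour, so an incident from an hour ago does not keep the dashboard red after the service has recovered.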

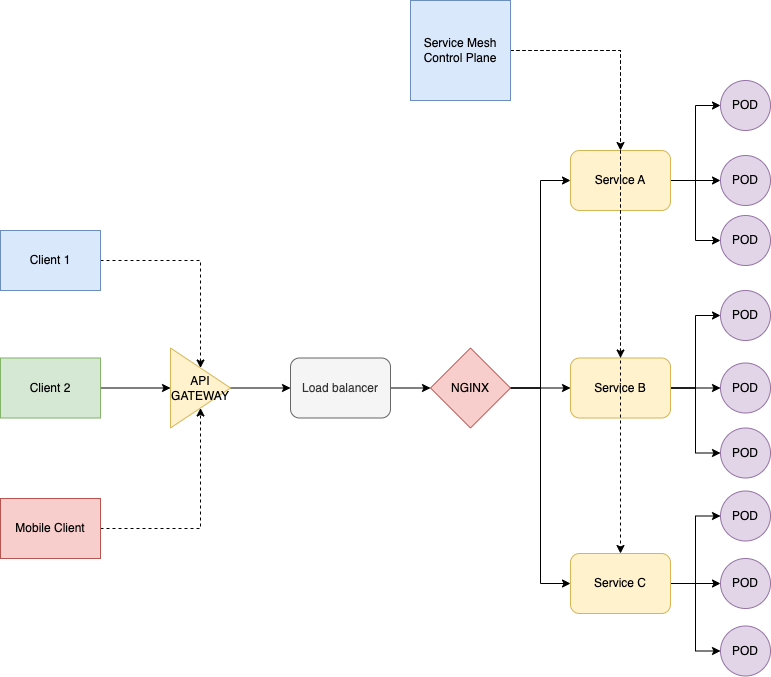
